Learning-based policies tend to work well in distribution but are prone to adversarial attacks outside it. One way to probe this is to generate adversarial human motions. But do these motions actually reveal the policy's brittleness? In this case, the adversarial human learns to "hide" their arm so that the robot cannot reach the itch spot and fails. Since this is unlikely to happen with normal users and is preventable with safety measures, the failure is acceptable and does not really show the policy's brittleness.

What we need is not just adversarial human motion, but motion that is both adversarial and "natural". When we optimize the human motion this way, we find a much more concerning example: a small jerk of the arm can send the robot flailing and spinning around wildly. Since this may well happen in daily interactions, it is catastrophic and needs to be addressed.

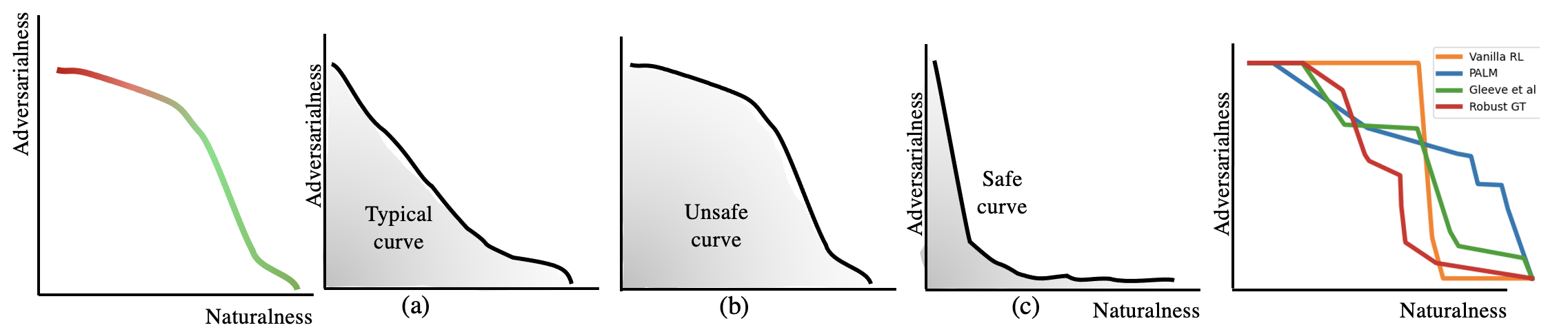

But how natural or unnatural do we allow the motions to be? We propose looking at the entire natural-adversarial Pareto frontier, which represents the robot's performance under all possible types of human motions. Our method, RIGID, presents a way to compute this Pareto frontier efficiently.
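
One way to read this trade-off is as a single objective with a weight $\lambda$ on naturalness. The form below is only a sketch consistent with the description of $\lambda$ in this post, not necessarily the exact objective used by RIGID. The symbols $r_{\text{env}}$ (the environment reward of the assistive task), $\pi_R$ (the robot policy), and $D$ (some measure of how far a human policy deviates from the natural human policy $\pi_H$) are assumptions introduced for illustration:

$$
\tilde{\pi}_H \;=\; \arg\max_{\pi} \; \mathbb{E}_{\pi,\, \pi_R}\!\left[\sum_t -\, r_{\text{env}}(s_t, a_t)\right] \;-\; \lambda \, D(\pi, \pi_H), \qquad \lambda \in [0, \infty).
$$

The first term rewards the human for making the assistive task fail (the inverted environment reward), and the second term penalizes deviation from natural motion.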

Here $\lambda$ controls how strongly the learned human policy $\tilde{\pi}_H$ is kept close to the natural human policy $\pi_H$. Setting $\lambda \rightarrow \infty$ results in a policy $\tilde{\pi}_H$ that closely resembles $\pi_H$ and yields a high reward. On the other hand, setting $\lambda = 0$ leads to a policy $\tilde{\pi}_H$ that is purely adversarial and harms the assistive task by inverting the environment reward. By selecting $\lambda \in [0, \infty)$, we arrive at a spectrum of human motions that interpolate between adversarial and natural. This is meaningful in Human-Robot Interaction: while we may not need to worry about purely adversarial human behaviour, as it is less likely to happen in reality, we do need to take precautions against human behaviour that appears natural yet causes robot failures.
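
As a rough illustration of how a sweep over $\lambda$ could be turned into a frontier, the sketch below keeps only the non-dominated points from a set of (naturalness, robot reward) pairs. It is a generic Pareto filter, not RIGID itself. The function name, the scoring convention (higher naturalness and lower robot reward are both "better" for the adversary), and the example numbers are all hypothetical.

```python
from typing import List, Tuple

# Each point is (naturalness, robot_reward) for one trained human policy,
# e.g. one point per value of lambda. Both scores are assumed to be given.
Point = Tuple[float, float]


def natural_adversarial_frontier(points: List[Point]) -> List[Point]:
    """Return the non-dominated points of a natural-adversarial trade-off.

    A point is dominated if another point is at least as natural AND
    gives the robot at most as much reward, and differs in at least one.
    """
    frontier: List[Point] = []
    # Visit the most natural motions first; break ties by lowest robot reward.
    for nat, rew in sorted(points, key=lambda p: (-p[0], p[1])):
        # Keep a point only if it hurts the robot strictly more than
        # every more-natural point already kept.
        if not frontier or rew < frontier[-1][1]:
            frontier.append((nat, rew))
    return frontier


# Hypothetical scores, for illustration only.
sweep = [(0.9, 80.0), (0.7, 40.0), (0.6, 60.0), (0.3, 10.0), (0.2, 30.0)]
frontier = natural_adversarial_frontier(sweep)
```

In this toy sweep, the points `(0.6, 60.0)` and `(0.2, 30.0)` are dropped because a more natural motion already causes a lower robot reward.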